Definition: Coherence defines the behavior of reads and writes to a single address location.

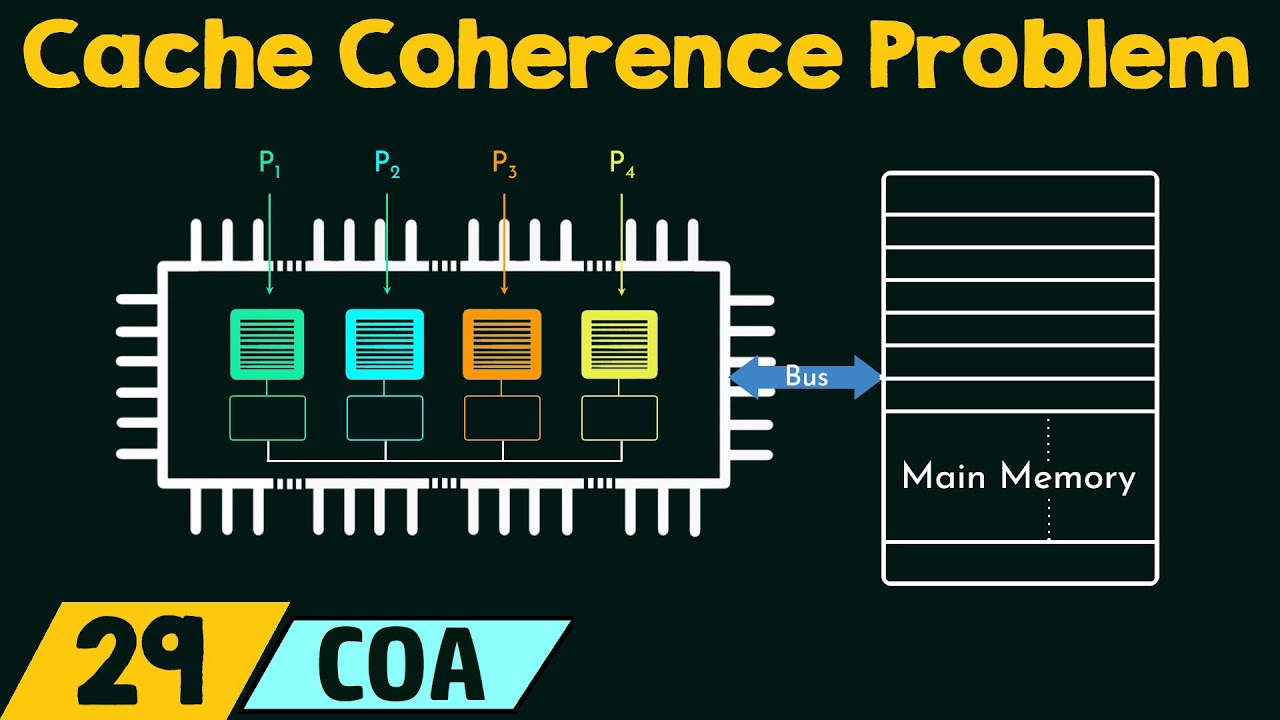

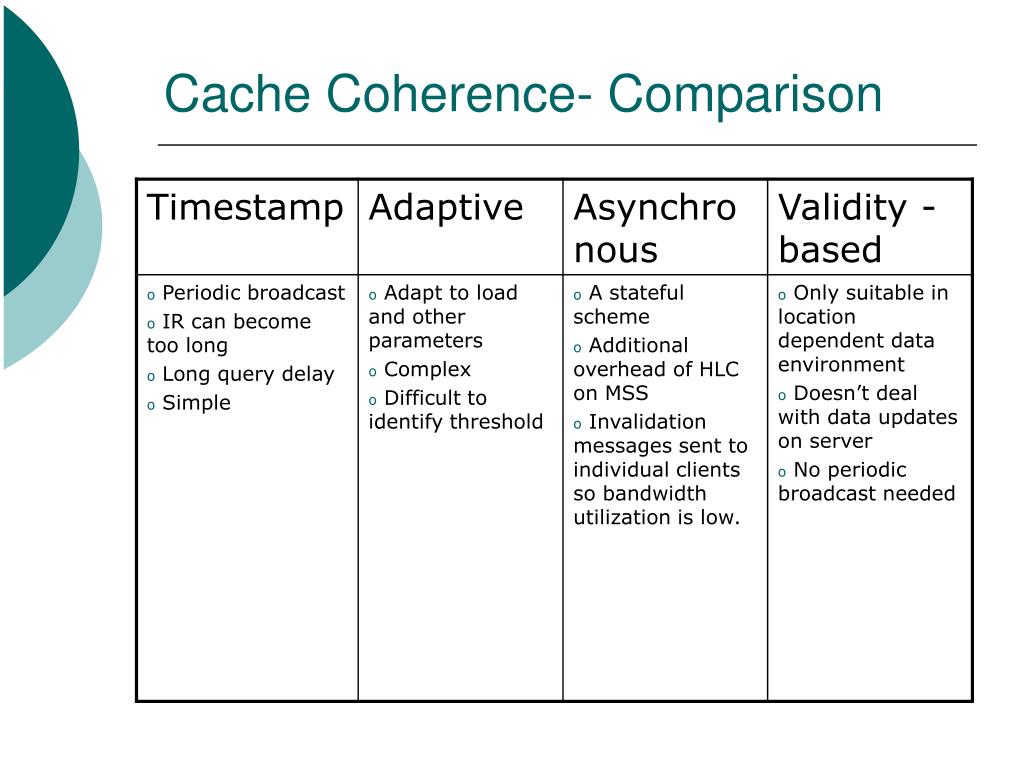

In computer architecture, cache coherence is the uniformity of shared resource data that ends up stored in multiple local caches. When clients in a system maintain caches of a common memory resource, problems may arise with incoherent data; this is particularly the case for CPUs in a multiprocessing system.

In the illustration, suppose both clients hold a cached copy of a particular memory block from a previous read. If the client on the bottom updates that memory block, the client on the top could be left with a stale copy without any notification of the change. Cache coherence is intended to manage such conflicts by maintaining a coherent view of the data values in multiple caches.

Coherent caches: The value in all the caches' copies is the same.

In a shared memory multiprocessor system with a separate cache memory for each processor, it is possible to have many copies of shared data: one copy in the main memory and one in the local cache of each processor that requested it. When one of the copies of data is changed, the other copies must reflect that change. Cache coherence is the discipline which ensures that changes in the values of shared operands (data) are propagated throughout the system in a timely fashion.

The following are the requirements for cache coherence:

Write propagation: Changes to the data in any cache must be propagated to other copies (of that cache line) in the peer caches.

Transaction serialization: Reads and writes to a single memory location must be seen by all processors in the same order.

Theoretically, coherence can be performed at the load/store granularity. However, in practice it is generally performed at the granularity of cache blocks.
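The stale-copy scenario and the write propagation requirement described above can be sketched with a toy model. This is a minimal illustration, not a real protocol: the names `Memory`, `Cache`, `bus`, and `demo` are hypothetical, the caches are write-through, and propagation is modeled by invalidating peer copies on every write.

```python
class Memory:
    """Toy main memory; unwritten addresses read as 0."""

    def __init__(self):
        self.data = {}

    def read(self, addr):
        return self.data.get(addr, 0)

    def write(self, addr, value):
        self.data[addr] = value


class Cache:
    """Write-through cache. `bus` is a shared list of peer caches,
    or None to model caches with no coherence mechanism at all."""

    def __init__(self, memory, bus=None):
        self.memory = memory
        self.lines = {}              # addr -> cached value
        self.bus = bus
        if bus is not None:
            bus.append(self)

    def read(self, addr):
        if addr not in self.lines:   # miss: fetch from main memory
            self.lines[addr] = self.memory.read(addr)
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        self.memory.write(addr, value)           # write-through
        if self.bus is not None:
            for peer in self.bus:                # write propagation,
                if peer is not self:             # modeled as invalidation
                    peer.lines.pop(addr, None)


def demo(coherent):
    """Both clients read a block, the bottom one writes it,
    then the top one reads it again."""
    memory = Memory()
    bus = [] if coherent else None
    top = Cache(memory, bus)
    bottom = Cache(memory, bus)
    top.read(0x10)
    bottom.read(0x10)
    bottom.write(0x10, 42)
    return top.read(0x10)


print(demo(coherent=False))  # top client still sees its old copy
print(demo(coherent=True))   # top client re-fetches the new value
```

Without the shared `bus`, the top client keeps returning the value it cached before the write. With it, the write removes the peer copy, so the next read misses and fetches the updated value from memory.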
Incoherent caches: The caches have different values of a single address location.

Several types of cache coherency may be utilized by different structures, as follows:

Directory-based coherence: Shared data is tracked in a common directory, which acts as a filter through which a processor must request access before loading an entry from main memory into its cache. When the data in a memory area changes, the directory updates or invalidates the other caches holding that entry.

Bus snooping: Each cache monitors the shared bus for write operations to memory locations it holds a copy of, and updates or invalidates its copy when such a write occurs. Used in smaller systems with fewer processors.

Snarfing: A cache controller watches both the address and the data on the bus and updates its own copy when another master modifies that location in main memory. Requires large amounts of bandwidth and resources compared to directory-based coherence and bus snooping.
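As a rough sketch of the directory-based approach listed above, the following toy model keeps a per-address set of sharers and sends invalidations only to caches that actually hold a copy. The names `Directory`, `DirCache`, and `run_directory_demo` are hypothetical, and the model assumes write-through behavior with invalidation rather than update.

```python
class Directory:
    """Tracks, per address, which caches hold a copy (the sharers)."""

    def __init__(self):
        self.memory = {}             # addr -> value
        self.sharers = {}            # addr -> set of caches with a copy
        self.invalidations_sent = 0

    def load(self, cache, addr):
        # Record the requester as a sharer, then supply the data.
        self.sharers.setdefault(addr, set()).add(cache)
        return self.memory.get(addr, 0)

    def store(self, writer, addr, value):
        # Invalidate only the caches known to hold this address.
        for cache in self.sharers.get(addr, set()) - {writer}:
            cache.invalidate(addr)
            self.invalidations_sent += 1
        self.sharers[addr] = {writer}
        self.memory[addr] = value


class DirCache:
    """Cache that routes every miss and every write through the directory."""

    def __init__(self, directory):
        self.directory = directory
        self.lines = {}

    def read(self, addr):
        if addr not in self.lines:
            self.lines[addr] = self.directory.load(self, addr)
        return self.lines[addr]

    def write(self, addr, value):
        self.directory.store(self, addr, value)
        self.lines[addr] = value

    def invalidate(self, addr):
        self.lines.pop(addr, None)


def run_directory_demo():
    directory = Directory()
    caches = [DirCache(directory) for _ in range(4)]
    caches[0].read(0x20)
    caches[1].read(0x20)
    caches[1].write(0x20, 7)         # only caches[0] needs invalidating
    return directory.invalidations_sent, caches[0].read(0x20)


print(run_directory_demo())
```

In this sketch, the two caches that never touched the address receive no messages. A snooping design would instead expose every write to every cache on the bus, which is one reason snooping is associated with smaller systems.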

Cache coherence is also known as cache coherency or cache consistency. Different techniques may be used to maintain cache coherency, including directory-based coherence, bus snooping and snarfing. To maintain consistency, a distributed shared memory (DSM) system imitates these techniques and uses a coherency protocol, which is essential to system operations. The majority of coherency protocols that support multiprocessors use a sequential consistency standard. DSM systems use a weak or release consistency standard.

The following methods are used for cache coherence management and consistency in read/write (R/W) and instantaneous operations:

Program order preservation is maintained with R/W data.

A coherent memory view is maintained, where consistent values are provided through shared memory.
